Nobel Laureate Sydney Brenner has criticized systems biology as a grandiose attempt to solve inverse problems in biology

Leo Szilard – brilliant, peripatetic Hungarian physicist, habitué of hotel lobbies, soothsayer without peer – first grasped the implications of a nuclear chain reaction in 1933 while stepping off the curb at a traffic light in London. Szilard has many distinctions to his name; not only did he file a patent for the first nuclear reactor with Enrico Fermi, but he was the one who urged his old friend Albert Einstein to write a famous letter to Franklin Roosevelt, and also the one who tried to get another kind of letter signed as the war was ending in 1945; a letter urging the United States to demonstrate a nuclear weapon in front of the Japanese before irrevocably stepping across the line. Szilard was successful in getting the first letter signed but failed in his second goal.

After the war ended, partly disgusted by the cruel use to which his beloved physics had been put, Szilard left professional physics to explore new pastures – in his case, biology. But apart from the moral abhorrence which led him to switch fields, there was a more pragmatic reason. As Szilard put it, this was an age when you took a year to discover something new in physics but only took a day to discover something new in biology.

This sentiment drove many physicists into biology, and the exodus benefited biological science spectacularly. Compared to physics, whose basic theoretical foundations had matured by the end of the war, biology was uncharted territory. The situation in biology was similar to the situation during the heyday of physics right after the invention of quantum theory when, as Paul Dirac quipped, “even second-rate physicists could make first-rate discoveries”. And physicists took full advantage of this situation. Since Szilard, biology in general and molecular biology in particular have been greatly enriched by the presence of physicists. Today, any physics student who is mulling a move into biology stands on the shoulders of illustrious forebears including Szilard, Erwin Schrödinger, Francis Crick, Walter Gilbert and most recently, Venki Ramakrishnan.

What is it that draws physicists to biology and why have they been unusually successful in making contributions to it? The allure of understanding life, which attracts other kinds of scientists too, is certainly one motivating factor. Erwin Schrödinger, whose little book “What is Life?” propelled many including Jim Watson and Francis Crick into genetics, is one example. Then there is the opportunity to simplify an enormously complex system into its constituent parts, an art at which physicists have excelled since the time of the Greeks. Biology, and especially the brain, is the ultimate complex system, and physicists are tempted to apply their reductionist approaches to deconvolute this complexity. Thirdly, there is the practical advantage that physicists have: a capacity to apply experimental tools like x-ray diffraction, along with quantitative reasoning including mathematical and statistical tools, to make sense of biological data.

The rise of the data scientists

It is this third reason that has led to a significant influx of not just physicists but other quantitative scientists, including statisticians and computer scientists, into biology. The rapid development of the fields of bioinformatics and computational biology has led to a great demand for scientists with the quantitative skills to analyze large amounts of data. A mathematical background brings valuable skills to this endeavor, and quantitative, data-driven scientists thrive in genomics. Eric Lander for instance got his PhD in mathematics at Oxford before – driven by the tantalizing goal of understanding the brain – he switched to biology. Cancer geneticist Bert Vogelstein also has a background in mathematics. All of us are familiar with names like Craig Venter, Francis Collins and James Watson when it comes to appreciating the cracking of the human genome, but we need to pay equal attention to the computer scientists without whom crunching and combining the immense amounts of data arising from sequencing would have been impossible. There is no doubt that, after the essentially chemically driven revolution in genetics of the 1970s, the second revolution in the field has been engineered by data crunching.

So what does the future hold? The rise of the “data scientists” has led to the burgeoning field of systems biology, a buzzword whose use seems to proliferate faster than any actual understanding of it. Systems biology seeks to integrate different kinds of biological data into a broad picture using tools like graph theory and network analysis. It promises to provide us with a big-picture view of biology like no other. Perhaps, physicists think, we will have a theoretical framework for biology that does what quantum theory did for, say, chemistry.

Emergence and systems biology: A delicate pairing

And yet even as we savor the fruits of these higher-level approaches to biology, we must be keenly aware of their pitfalls. One of the fundamental truths about the physicists’ view of biology is that it is steeped in reductionism. Reductionism is the great legacy of modern science which saw its culmination in the two twentieth-century scientific revolutions of quantum mechanics and molecular biology. It is hard to overstate the practical ramifications of reductionism. And yet as we tackle the salient problems in twenty-first century biology, we are becoming aware of the limits of reductionism. The great antidote to reductionism is emergence, a property that renders complex systems irreducible to the sum of their parts. In 1972 the Nobel Prize-winning physicist Philip Anderson penned a remarkably far-reaching article titled “More is Different” which explored the inability of “lower-level” phenomena to predict their “higher-level” manifestations.

The brain is an outstanding example of emergent phenomena. Many scientists think that neuroscience is going to be to the twenty-first century what molecular biology was to the twentieth. For the first time in history, partly through recombinant DNA technology and partly due to state-of-the-art imaging techniques like functional MRI, we are poised on the brink of making major discoveries about the brain; no wonder that Francis Crick moved into neuroscience during his later years. But the brain presents a very different kind of challenge from that posed by, say, a superconductor or a crystal of DNA. The brain is a highly hierarchical and modular structure, with multiple dependent and yet distinct layers of organization. From the basic level of the neuron we move on to collections of neurons and glial cells which behave very differently, then to specialized centers for speech, memory and other tasks, and finally to the whole brain. As we move up this ladder of complexity, emergent features arise at every level whose behavior cannot be gleaned merely from the behavior of individual neurons.

The tyranny of inverse problems

The problem thwarts systems biology in general. In recent years, some of the most insightful criticism of systems biology has come from Sydney Brenner, a founding father of molecular biology whose 2010 piece in Philosophical Transactions of the Royal Society titled “Sequences and Consequences” should be required reading for those who think that systems biology’s triumph is just around the corner. In his essay, Brenner strikes at what he sees as the heart of the goal of systems biology. After reminding us that the systems approach seeks to generate viable models of living systems, Brenner goes on to say that:

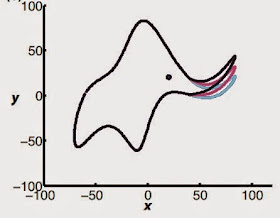

“Even though the proponents seem to be unconscious of it, the claim of systems biology is that it can solve the inverse problem of physiology by deriving models of how systems work from observations of their behavior. It is known that inverse problems can only be solved under very specific conditions. A good example of an inverse problem is the derivation of the structure of a molecule from the X-ray diffraction pattern of a crystal…The universe of potential models for any complex system like the function of a cell has very large dimensions and, in the absence of any theory of the system, there is no guide to constrain the choice of model.”

What Brenner is saying is that every systems biology project essentially results in a model, a model that tries to solve the problem of divining reality from experimental data. However, a model is not reality; it is an imperfect picture of reality constructed from bits and pieces of data. It is therefore – and this has to be emphasized – only one representation of reality. Other models might satisfy the same experimental constraints, and for systems with thousands of moving parts like cells and brains, the number of models is astronomically large. In addition, data in biological measurements is often noisy with large error bars, further complicating its use. This puts systems biology into the classic conundrum of the inverse problem that Brenner points out, and like other inverse problems, the solution you find is likely to be one among an expanding universe of solutions, many of which might be better than the one you have. This means that while models derived from systems biology might be useful – and often this is a sufficient requirement for using them – they may well leave out some important feature of the system.
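To make the non-uniqueness concrete, here is a minimal sketch under stated assumptions. It is not taken from Brenner's essay: the one-species synthesis and degradation model, the rate constants, the "observed" level and its error bar are all hypothetical values chosen for illustration. Several parameter sets reproduce the same steady-state measurement, yet they describe quite different dynamics.

```python
import math

def steady_state_level(k_syn, k_deg):
    """Steady-state level of a species made at rate k_syn and removed at rate k_deg * level."""
    return k_syn / k_deg

def level_at(t, k_syn, k_deg):
    """Level at time t when starting from zero: (k_syn / k_deg) * (1 - exp(-k_deg * t))."""
    return (k_syn / k_deg) * (1.0 - math.exp(-k_deg * t))

# Hypothetical measurement and error bar (illustrative numbers only).
observed_level = 10.0
tolerance = 0.5

# Hypothetical candidate models, each a (k_syn, k_deg) pair.
candidates = [(1.0, 0.1), (5.0, 0.5), (20.0, 2.0), (3.0, 1.0)]

# Keep every candidate that agrees with the single steady-state observation.
consistent = [
    (k_syn, k_deg)
    for k_syn, k_deg in candidates
    if abs(steady_state_level(k_syn, k_deg) - observed_level) <= tolerance
]

for k_syn, k_deg in consistent:
    early = level_at(1.0, k_syn, k_deg)
    print(f"k_syn={k_syn}, k_deg={k_deg}: fits the data, level at t=1 is {early:.2f}")
```

In this toy setting the steady-state data alone cannot choose between the surviving candidates; only an additional kind of measurement, such as a time course, would separate them.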

There has been some very interesting recent work in addressing such conundrums. One of the major challenges in the inverse problem universe is to find a minimal set of parameters that can describe a system. Ideally the parameters should be sensitive to variation so that one constrains the parameter space describing the given system and avoids the "anything goes" trap. A particularly promising example is the use of 'sloppy models' developed by Cornell physicist James Sethna and others in which parameter combinations rather than individual parameters are varied and those combinations which are most tightly constrained are then picked as the 'right' ones.
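The idea of constraining parameter combinations rather than individual parameters can be sketched with a toy example. This is an illustration under assumptions, not Sethna's own code or method as published: the two-exponential decay model, the rate constants and the sampling times are hypothetical. The sketch builds the 2x2 sensitivity matrix J^T J in log-parameters and compares its eigenvalues; a large eigenvalue marks a tightly constrained ("stiff") combination and a small one marks a poorly constrained ("sloppy") combination.

```python
import math

def log_sensitivities(t, k1, k2):
    """Derivatives of y(t) = exp(-k1*t) + exp(-k2*t) with respect to log k1 and log k2."""
    return (-k1 * t * math.exp(-k1 * t), -k2 * t * math.exp(-k2 * t))

def fisher_matrix(times, k1, k2):
    """Entries (a, b, c) of the symmetric 2x2 matrix J^T J = [[a, b], [b, c]]."""
    a = b = c = 0.0
    for t in times:
        s1, s2 = log_sensitivities(t, k1, k2)
        a += s1 * s1
        b += s1 * s2
        c += s2 * s2
    return a, b, c

def eigen_2x2(a, b, c):
    """Eigenvalues (largest first) and unit eigenvectors of [[a, b], [b, c]], assuming b != 0."""
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    pairs = []
    for lam in (mean + radius, mean - radius):
        vx, vy = b, lam - a          # satisfies (a - lam) * vx + b * vy = 0
        norm = math.hypot(vx, vy)
        pairs.append((lam, (vx / norm, vy / norm)))
    return pairs

# Hypothetical sampling times and rate constants (illustrative values only).
times = [0.5 * i for i in range(1, 11)]
k1, k2 = 1.0, 1.2

(stiff_val, stiff_vec), (sloppy_val, sloppy_vec) = eigen_2x2(*fisher_matrix(times, k1, k2))

print("stiff eigenvalue: ", stiff_val, "direction:", stiff_vec)
print("sloppy eigenvalue:", sloppy_val, "direction:", sloppy_vec)
print("stiff/sloppy ratio:", stiff_val / sloppy_val)
```

In this toy case the stiff direction moves both log-rates together while the sloppy direction trades one rate against the other, which is why the data pin down a combination of the two rates far better than either rate alone.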

But quite apart from these theoretical fixes, Brenner’s remedy for avoiding the fallout from imperfect systems modeling is to simply use the techniques garnered from classical biochemistry and genetics over the last century or so. In one sense systems biology is nothing new; as Brenner tartly puts it, “there is a watered-down version of systems biology which does nothing more than give a new name to physiology, the study of function and the practice of which, in a modern experimental form, has been going on at least since the beginning of the Royal Society in the seventeenth century”. Careful examination of mutant strains of organisms, measurement of the interactions of proteins with small molecules like hormones, neurotransmitters and drugs, and observation of phenotypic changes caused by known genotypic perturbations remain tried-and-tested ways of drawing conclusions about the behavior of living systems on a molecular scale.

Genomics and drug discovery: Tread softly

This viewpoint is also echoed by those who take a critical view of what they say is an overly genomics-based approach to the treatment of diseases. A particularly clear-headed view comes from Gerry Higgs, who in 2004 presciently wrote a piece titled “Molecular Genetics: The Emperor’s Clothes of Drug Discovery”. Higgs criticizes the whole gamut of genomic tools used to discover new therapies, from the “high-volume, low-quality sequence data” to the genetically engineered cell lines which can give a misleading impression of molecular interactions under normal physiological conditions. Higgs points to many successful drugs discovered in the last fifty years which have been found using the tools of classical pharmacology and biochemistry; these would include the best-selling, Nobel Prize-winning drugs developed by Gertrude Elion and James Black based on simple physiological assays. Higgs’s point is that the genomics approach to drugs runs the risk of becoming too reductionist and narrow-minded, often relying on isolated systems and artificial constructs that are uncoupled from whole systems. His prescription is not to discard these tools, which can undoubtedly provide important insights, but to supplement them with older and proven physiological experiments.

Does all this mean that systems biology and genomics will be useless in leading us to new drugs? Not at all. There is no doubt that genomic approaches can be remarkably useful in enabling controlled experiments. The systems biologist Leroy Hood for instance has pointed out how selective gene silencing can allow us to tease apart the side effects of drugs from their beneficial effects. But what Higgs, Brenner and others are impressing upon us is that we shouldn’t allow genomics to become the be-all and end-all of drug discovery. Genomics should only be employed as part of a judiciously chosen cocktail of techniques, including classical ones, for interrogating the function of living systems. And this applies more generally to physics-based and systems biology approaches.

Perhaps the real problem from which we need to wean ourselves is “physics envy”; as the physicist-turned-financial modeler Emanuel Derman reminds us, “Just like physicists, we would like to discover three laws that govern ninety-nine percent of our system’s intricacies. But we are more likely to discover ninety-nine laws that explain three percent of our system”. And that’s as good a starting point as any.

Adapted from a previous post on Scientific American Blogs.
