Newton rightly declared that science progresses by standing on the shoulders of giants. But his often-quoted statement applies even more broadly than he thought. A case in point: when it comes to the discovery of DNA, how many have heard of Friedrich Miescher, Fred Griffith or Lionel Alloway? Miescher was the first person to isolate DNA, from the pus-soaked bandages of patients. Fred Griffith performed the crucial experiment that proved that a ‘transforming principle’ was somehow passing from a virulent dead bacterium to a non-virulent live bacterium, magically rendering the non-virulent strain virulent. Lionel Alloway came up with the first expedient method to isolate DNA by adding alcohol to a concentrated solution.


Book review: "Unraveling the Double Helix: The Lost Heroes of DNA", by Gareth Williams.

Brian Greene and John Preskill on Steven Weinberg

There's a very nice tribute to Steven Weinberg by Brian Greene and John Preskill that I came across recently that is worth watching. Weinberg was of course one of the greatest theoretical physicists of the latter half of the 20th century, winning the Nobel Prize for one of the great unifications of modern physics, the unification of the electromagnetic and the weak forces. He was also a prolific author of rigorous, magisterial textbooks on quantum field theory, gravitation and other aspects of modern physics. And on top of it all, he was a true scholar and gifted communicator of complex ideas to the general public through popular books and essays; not just ideas in physics but ones in pretty much any field that caught his fancy. I had the great pleasure and good fortune to interact with him twice.

The conversation between Greene and Preskill is illuminating because it sheds light on many underappreciated qualities of Weinberg that enabled him to become a great physicist and writer, qualities that are worth emulating. Greene starts out by talking about when he first interacted with Weinberg when he gave a talk as a graduate student at the physics department of the University of Texas at Austin where Weinberg taught. He recalls how he packed the talk with equations and formal derivations, only to have the same concepts explained by Weinberg more clearly later. As physicists appreciate, while mathematics remains the key to unlock the secrets of the universe, being able to understand the physical picture is key. Weinberg was a master at doing both.

Preskill was a graduate student of Weinberg's at Harvard and he talks about many memories of Weinberg. One of the more endearing and instructive ones is from when he introduced Weinberg to his parents at his house. They were making ice cream for dinner, and Weinberg wondered aloud why we add salt while making the ice cream. By that time Weinberg had already won the Nobel Prize, so Preskill's father wondered if he genuinely didn't understand that you add the salt to lower the melting point of the ice, so that the ice bath gets cold enough to freeze the ice cream. When Preskill's father mentioned this, Weinberg went, "Of course, that makes sense!" Now both Preskill and Greene think that Weinberg might have been playing it up a bit to impress Preskill's family, but I wouldn't be surprised if he genuinely did not know; top tier scientists who work in the most rarefied heights of their fields are sometimes not as connected to basic facts as graduate students might be.

More importantly, in my mind the anecdote illustrates an important quality that Weinberg had and that any true scientist should have, which is to never hesitate to ask even simple questions. If, as a Nobel Prize winning scientist, you think you are beyond asking simple questions, especially when you don't know the answers, you aren't being a very good scientist. The anecdote demonstrates a bigger quality that Weinberg had which Preskill and Greene discuss, which was his lifelong curiosity about things that he didn't know. He never hesitated to pump people for information about aspects of physics he wasn't familiar with, not to mention other disciplines. Freeman Dyson, whom I knew well, had the same quality: both Weinberg and Dyson were excellent listeners. In fact, asking the right question, whether it was about salt and ice cream or about electroweak unification, seems to have been a signature Weinberg quality that students should take to heart.

Weinberg became famous for a seminal 1967 paper that unified the electromagnetic and weak forces (and used ideas developed by Peter Higgs to postulate what we now call the Higgs boson). The title of the paper was "A Model of Leptons", but interestingly, Weinberg wasn't much of a model builder. As Preskill says, he was much more interested in developing general, overarching theories than building models, partly because models have a limited applicability to a specific domain while theories are much more general. This is a good point, but of course, in fields like my own field of computational chemistry, the problem isn't that there are no general theoretical frameworks - there are, most notably the frameworks of quantum mechanics and statistical mechanics - but that applying them to practical problems is too complicated unless we build specific models. Nevertheless, Weinberg's attitude of shunning specific models for generality is emblematic of the greatest scientists, including Newton, Pauling, Darwin and Einstein.

Weinberg was also a rather solitary researcher; as Preskill points out, of his 50 most highly cited papers, 42 were written alone. He admitted himself in a talk that he wasn't the best collaborator. This did not make him the best graduate advisor either, since while he was supportive, his main contribution was more along the lines of inspiration than guidance and day-to-day conversations. He would often point students to papers and ask them to study them themselves, which works fine if you are Brian Greene or John Preskill but perhaps not so much if you are someone else. In this sense Weinberg seems to have been a bit like Richard Feynman, who was a great physicist but who also wasn't the best graduate advisor.

Finally, both Preskill and Greene touch upon Weinberg's gifts as a science writer and communicator. More than many other scientists, he never talked down to his readers because he understood that many of them were as smart as him even if they weren't physicists. Read any one of his books and you see him explaining even simple ideas, but never in a way that assumes his audience are dunces. This is a lesson that every scientist and science writer should take to heart.

Greene especially knew Weinberg well because he invited him often to the World Science Festival which he and his wife had organized in New York over the years. The tribute includes snippets from Weinberg talking about the current and future state of particle physics. In the last part, an interviewer asks him about what is arguably the most famous sentence from his popular writings. In the last part of his first book, "The First Three Minutes", he says, "The more the universe seems comprehensible, the more it seems pointless." Weinberg's eloquent response when he was asked what this means sums up his life's philosophy and tells us why he was so unique, as a scientist and as a human being:

"Oh, I think everything's pointless, in the sense that there's no point out there to be discovered by the methods of science. That's not to say that we don't create points for our lives. For many people it's their loved ones; living a life of helping people you love, that's all the point that's needed for many people. That's probably the main point for me. And for some of us there's a point in scientific discovery. But these points are all invented by humans and there's nothing out there that supports them. And it's better that we not look for it. In a way, we are freer, in a way it's more noble and admirable to give points to our lives ourselves rather than to accept them from some external force."

A long time ago, in a galaxy far, far away

For a brief period earlier this week, social media and the world at large seemed to stop squabbling about politics and culture and united in a moment of wonder as the James Webb Space Telescope (JWST) released its first stunning images of the cosmos. These "extreme deep field" images represent the farthest and the oldest that we have been able to see in the universe, surpassing even the amazing images captured by the Hubble Space Telescope that we have become so familiar with. We will soon see these photographs decorating the walls of classrooms and hospitals everywhere.

The scale of the JWST images is breathtaking. Each dot represents a galaxy or nebula from far, far away. Each galaxy or nebula is home to billions of stars in various stages of life and death. The curved light in the image comes from a classic prediction of Einstein's general theory of relativity called gravitational lensing - the bending of light by gravity that makes spacetime curvature act like a lens.

Some of the stars in these distant galaxies and nebulae are being nurtured in stellar nurseries; others are in their end stages and might be turning into neutron stars, supernovae or black holes. And since light from more distant galaxies takes longer to reach us, the farther out we look, the further back in time we see. This makes the image a gigantic hodgepodge of older and newer photographs, ranging from objects that go as far back as 100 million years after the Big Bang to relatively nearby (on a cosmological timescale) objects like Stephan's Quintet and the Carina Nebula, the latter only a few thousand light years away.

It is a significant and poignant fact that we are seeing objects not as they are but as they were. The Carina Nebula is 8,500 light years away, so we are seeing it as it looked 8,500 years ago, during the Neolithic Age when humanity had just taken to farming and agriculture. On the oldest timescale, objects that are billions of light years away look the way they did during the universe's childhood. The fact that we are seeing old photographs of stars, galaxies and nebulae gives the photo a poignant quality. For a younger audience who has always grown up with Facebook, imagine seeing a hodgepodge of images of people from Facebook over the last fifteen years presented to you: some people are alive and some people no longer so, some people look very different from what they did when their photo was last taken. It would be a poignant feeling. But the JWST image also fills me with joy. Looking at the vast expanse, the universe feels not like a cold, inhospitable place but like a living thing that's pulsating with old and young blood. We are a privileged part of this universe.

There's little doubt that one of the biggest questions stimulated by these images would be whether we can detect any signatures of life on one of the many planets orbiting some of the stars in those galaxies. By now we have discovered thousands of extrasolar planets around the universe, so there's no doubt that there will be many more in the regions the JWST is capturing. The analysis of the telescope data already indicates a steamy atmosphere containing water on a planet about 1,150 light years away. Detecting elements like nitrogen, carbon, sulfur and phosphorus is a good start to hypothesizing about the presence of life, but much more would be needed to clarify whether these elements arise from an inanimate process or a living one. It may seem impossible that a landscape as gargantuan as this one is completely barren of life, but given the improbability of especially intelligent life arising through a series of accidents, we may have to search very wide and long.

I was gratified as my Twitter timeline - otherwise mostly a cesspool of arguments and ad hominem attacks punctuated by all-too-rare tweets of insight - was completely flooded with the first images taken by the JWST. The images proved that humanity is still capable of coming together and focusing on a singular achievement of science and technology, however briefly. Most of all, they prove both that science is indeed bigger than all of us and that we can comprehend it if we put our minds and hands together. It's up to us to decide whether we distract ourselves and blow ourselves up with our petty disputes or explore the universe as revealed by JWST and other feats of human ingenuity in all its glory.

Image credits: NASA, ESA, CSA and STScI

Book Review: "The Rise and Reign of the Mammals: A New History, from the Shadow of the Dinosaurs to Us", by Steve Brusatte

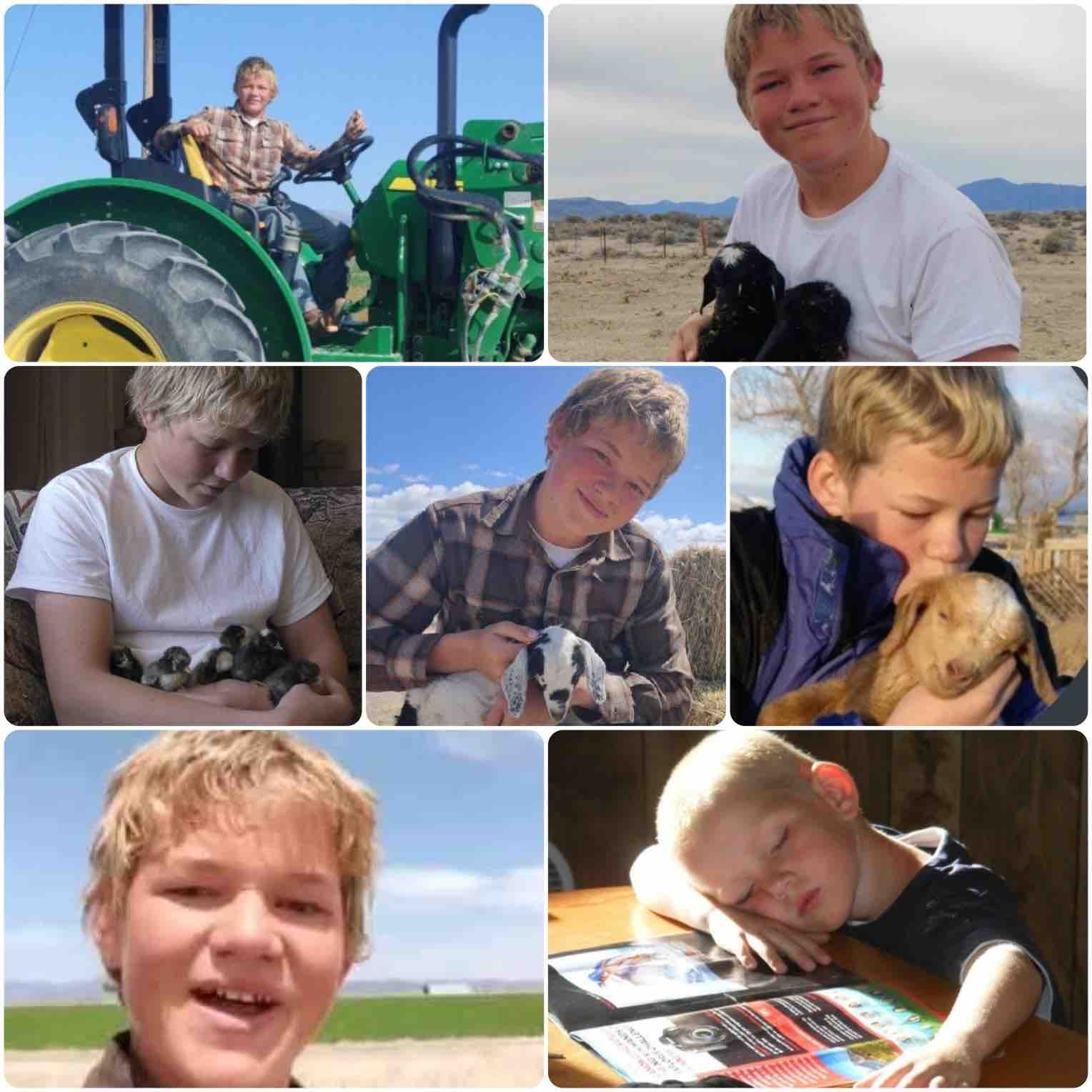

Book Review: "Don't Tell Me I Can't: An Ambitious Homeschooler's Journey", by Cole Summers (Kevin Cooper)

I finished this book with a profound sense of loss combined with an inspired feeling of admiration for what young people can do. Cole Summers grew up in the Great Basin Desert region of Nevada and Utah with a father who had tragically become confined to a wheelchair after an accident in military training. His parents were poor but they wanted Cole to become an independent thinker and doer. Right from when he was a kid, they never said "No" to him and let him try out everything that he wanted to. When four-year-old Cole wanted to plant and grow a garden, they let him, undeterred by the minor cuts and injuries on the way.

Should a scientist have "faith"?

Scientists like to think that they are objective and unbiased, driven by hard facts and evidence-based inquiry. They are proud of saying that they only go wherever the evidence leads them. So it might come as a surprise to realize that not only are scientists as biased as non-scientists, but that they are often driven as much by belief as are non-scientists. In fact they are driven by more than belief: they are driven by faith. Science. Belief. Faith. Seeing these words in a sentence alone might make most scientists bristle and want to throw something at the wall or at the writer of this piece. Surely you aren’t painting us with the same brush that you might those who profess religious faith, they might say?

But there’s a method to the madness here. First consider what faith is typically defined as – it is belief in the absence of evidence. Now consider what science is in its purest form. It is a leap into the unknown, an extrapolation of what is into what can be. Breakthroughs in science by definition happen “on the edge” of the known. Now what sits on this edge? Not the kind of hard evidence that is so incontrovertible as to dispel any and all questions. On the edge of the known, the data is always wanting, the evidence always lacking, even if not absent. On the edge of the known you have wisps of signal in a sea of noise, tantalizing hints of what may be, with never enough statistical significance to nail down a theory or idea. At the very least, the transition from “no evidence” to “evidence” lies on a continuum. In the absence of good evidence, what does a scientist do? He or she believes. He or she has faith that things will work out. Some call it a sixth sense. Some call it intuition. But “faith” fits the bill equally.

If this reliance on faith seems like heresy, perhaps it’s reassuring to know that such heresies were committed by many of the greatest scientists of all time. All major discoveries, when they are made, at first rely on small pieces of data that are loosely held. A good example comes from the development of theories of atomic structure.

When Johann Balmer came up with his formula for explaining the spectral lines of hydrogen, he based his equation on only four lines that were measured with accuracy by Anders Ångström. He then took a leap of faith and came up with a simple numerical formula that predicted many other lines emanating from the hydrogen atom and not just four. But the greatest leap of faith based on Balmer’s formula was taken by Niels Bohr. In fact Bohr did not hesitate to call it a leap of faith. In his case, the leap of faith involved assuming that electrons in atoms only occupy certain discrete energy states, and that figuring out the transitions between these states somehow involved Planck’s constant in an important way. When Bohr could reproduce Balmer’s formula based on this great insight, he knew he was on the right track, and physics would never be the same. One leap of faith built on another.
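To make the smallness of Balmer's "small data" concrete, here is a minimal sketch of his formula in its usual textbook form, wavelength = B · n² / (n² − 4) for n = 3, 4, 5, and so on. The constant name `BALMER_CONSTANT_NM`, its rounded value of about 364.56 nm, and the function name are my own choices for illustration and do not come from any source quoted here. The first four values come out close to the four visible hydrogen lines (roughly 656, 486, 434 and 410 nm); everything beyond n = 6 is the extrapolation, the leap of faith.

```python
# Balmer's formula in its textbook form: wavelength = B * n^2 / (n^2 - 4).
# B is an empirical constant of about 364.56 nm (assumed, rounded value).
BALMER_CONSTANT_NM = 364.56


def balmer_wavelength_nm(n: int) -> float:
    """Return the predicted wavelength (in nm) of the Balmer line for integer n >= 3."""
    if n < 3:
        raise ValueError("n must be an integer >= 3")
    return BALMER_CONSTANT_NM * n**2 / (n**2 - 4)


if __name__ == "__main__":
    # n = 3..6 correspond to the four visible lines Balmer fitted;
    # n = 7 and above are predictions from the same formula.
    for n in range(3, 10):
        label = "fitted" if n <= 6 else "predicted"
        print(f"n = {n}: {balmer_wavelength_nm(n):.1f} nm ({label})")
```

Four numbers in, an entire series out: the formula has a single adjustable constant, which is part of why such a bold extrapolation from so few points could turn out to be right.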

To a 21st century scientist, Bohr’s and Balmer’s thinking as well as that of many other major scientists well through the 20th century indicates a manifestly odd feature in addition to leaps of faith – an absence of what we call statistical significance or validation. As noted above, Balmer used only four data points to come up with his formula, and Bohr not too many more. Yet both were spectacularly right. Isn’t it odd, from the standpoint of an age that holds statistical validation sacrosanct, to have these great scientists make their leaps of faith based on paltry evidence, “small data” if you will? But that in fact is the whole point about scientific belief, that it originates precisely when there isn’t copious evidence to nail the fact, when you are still on shaky ground and working at the fringe. But this belief also supremely echoes a famous quote by Bohr’s mentor Rutherford – “If your experiment needs statistics, you ought to have done a better experiment.” Resounding words from the greatest experimental physicist of the 20th century whose own experiments were so carefully chosen that he could deduce from them extraordinary truths about the structure of matter based on a few good data points.

The transition between belief and fact in science in fact lies on a continuum. There are very few cases where a scientist goes overnight from a state of “belief” to one of “knowledge”. In reality, as evidence builds up, the scientist becomes more and more confident until there are not enough grounds for believing otherwise. In many cases the scientist may not even be alive to see his or her theory confirmed in all its glory: even the Newtonian model of the solar system took until the middle of the 19th century to be fully validated, more than a hundred years after Newton’s death.

A good example of this gradual transition of a scientific theory from belief to confident espousal is provided by the way Charles Darwin’s theory of evolution by natural selection, well, evolved. It’s worth remembering that Darwin took more than twenty years to build up his theory after coming home from his voyage on the HMS Beagle in 1836. At first he only had hints of an idea based on extensive and yet uncatalogued and disconnected observations of flora and fauna from around the world. Some of the evidence he had documented – the names of Galapagos finches, for instance – was wrong and had to be corrected by his friends and associates. It was only by arduous experimentation and cataloging that Darwin – a famously cautious man – was able to reach the kind of certainty that prompted him to finally publish his magnum opus, On the Origin of Species, in 1859, and even then only after he was in danger of being scooped by Alfred Russel Wallace. There can be said to be no one fixed eureka moment when Darwin could say that he had transitioned from “believing” in evolution by natural selection to “knowing” that evolution by natural selection was true. And yet, by 1859, this most meticulous scientist was clearly confident enough in his theory that he no longer simply believed in it. But it certainly started out that way. The same uncertain transition between belief and knowledge applies to other discoveries. Einstein often talked about his faith in his general theory of relativity before observations of the solar eclipse of 1919 confirmed its major prediction, the bending of starlight by gravity, remarking that if he was wrong it would mean that the good lord had led him down the wrong garden path. When did Watson and Crick go from believing that DNA is a double helix to knowing that it is? When did Alfred Wegener go from believing in continental drift to knowing that it was real? In some sense the question is pointless. Scientific knowledge, both individually and collectively, gets cemented with greater confidence over time until the objections simply cannot stand up to the weight of the accumulated evidence.

Faith, at least in one important sense, is thus an important part of the mindset of a scientist. So why should scientists not nod in assent if someone then tells them that there is no difference, at least in principle, between their faith and religious faith? For two important reasons. Firstly, the “belief” that a scientist has is still based on physical and not supernatural evidence, even if all the evidence may not yet be there. What scientists call faith is still based on data and experiments, not mystic visions and pronouncements from a holy book. More importantly, unlike religious belief, scientific belief can wax and wane with the evidence; it importantly is tentative and always subject to change. Any good scientist who believes X will be ready to let go of their belief in X if strong evidence to the contrary presents itself. That is in fact the main difference between scientists on one hand and clergymen and politicians on the other; as Carl Sagan once asked, when was the last time you heard either of the latter say, “You know, that’s a really good counterargument. Maybe what I am saying is not true after all.”

Faith may also interestingly underlie one of the classic features of great science – serendipity. Unlike what we often believe, serendipity does not always refer to pure unplanned accident but to deliberately planned accident; as Louis Pasteur memorably put it, chance favors the “prepared mind”. A remarkable example of deliberate serendipity comes from an anecdote about his discovery of slow neutrons that Enrico Fermi narrated to Subrahmanyan Chandrasekhar. Slow neutrons unlocked the door to nuclear power and the atomic age. Fermi told Chandrasekhar how he came to make this discovery which he personally considered – among a dozen seminal ones – to be his most important one (From Mehra and Rechenberg, “The Historical Development of Quantum Theory, Vol. 6”):

Chandrasekhar’s invocation of Hadamard’s thesis of unconscious discovery might provide a rational underpinning for what we are calling faith. In this case, Fermi’s great intuitive jump, his seemingly irrational faith that paraffin might slow down neutrons, might have been grounded in the extensive body of knowledge about physics that was housed in his brain, forming connections that he wasn’t even aware of. Not every leap of faith can be explained this way, but some can. In this sense a scientist’s faith, unlike religious faith, is very much rational and based on known facts.

Ultimately there’s a supremely important guiding role that faith plays in science. Scientists ignore believing at their own peril. This is because they have to constantly tread the tightrope of skepticism and wonder. Shut off your belief valve completely and you will never believe anything until there is five-sigma statistical significance for it. Promising avenues of inquiry that are nonetheless on shaky grounds for the moment will be dismissed by you. You may never be the first explorer into rich new scientific territory. But open the belief valve completely and you will have the opposite problem. You may believe anything based on the flimsiest of evidence, opening the door to crackpots and charlatans of all kinds. So where do you draw the line?

In my mind there are a few logical rules of thumb that might help a scientist to mark out territories of non-belief from ones where leaps of faith might be warranted. Plausibility based on the known laws of science should play a big role. For instance, belief in homeopathy would be mistaken based on the most elementary principles of physics and chemistry, including the laws of mass action and dose response. But what about belief in extraterrestrial intelligence? There the situation is different. Based on our understanding of the laws of quantum theory, stellar evolution and biological evolution, there is no reason to believe that life could not have arisen on another planet somewhere in the universe. In this sense, belief in extraterrestrial intelligence is justified belief, even if we don’t have a single example of life existing anywhere else. We should keep on looking. Faith in science is also more justified when there is a scientific crisis. In a crisis you are on desperate grounds anyway, so postulating ideas that aren’t entirely based on good evidence isn’t going to make matters worse and is more likely to lead into novel territory. Planck’s desperate assumption that energy only comes in discrete packets was partly an act of faith that resolved a crisis in classical physics.

Ultimately, though, drawing a firm line is always hard, especially for topics on the fuzzy boundary. Extra-sensory perception, the deep hot biosphere and a viral cause for mad cow disease are three theories which are implausible although not impossible in principle; there is little in them that flies against the basic laws of science. The scientists who believe in these theories are sticking their necks out and taking a stand. They are heretics who are taking the risk of being called fools; since most bold new ideas in science are usually wrong, they often will be. But they are setting an august precedent.

If science is defined as the quest into the unknown, a foray into the fundamentally new and untested, it is more important than ever, especially in this age of conformity, for belief to play a more central role in the practice of science. The greatest scientists in history have always been ones who took leaps of faith, whether it was Bohr with his quantum atom, Einstein with his thought experiments or Noether with her deep feeling for the relationship between symmetry and conservation laws, a feeling felt but not seen. For creating minds like these, we need to nurture an environment that not just allows but actively encourages scientists, especially young ones, to tread the boundary between evidence and speculation with aplomb, to exercise their rational faith with abandon. Marie Curie once said, “Now is the time to fear less, so that we may understand more.” To which I may add, “Now is the time to believe more, so that we may understand even more.”

First published on 3 Quarks Daily

Man as a "machine-tickling aphid"

Philip Morrison on challenges with AI

Philip Morrison, a top-notch physicist and polymath with an incredible knowledge of things beyond his immediate field, was also a speed reader who reviewed hundreds of books on a stunning range of topics. In an essay from one of his collections, he held forth on what he thought were the significant challenges with machine intelligence. It strikes me that many of these are still valid (italics mine).

"First, a machine simulating the human mind can have no simple optimization game it wants to play, no single function to maximize in its decision making, because one urge to optimize counts for little until it is surrounded by many conditions. A whole set of vectors must be optimized at once. And under some circumstances, they will conflict, and the machine that simulates life will have the whole problem of the conflicting motive, which we know well in ourselves and in all our literature.

Second, probably less essential, the machine will likely require a multisensory kind of input and output in dealing with the world. It is not utterly essential, because we know a few heroic people, say, Helen Keller, who managed with a very modest cross-sensory connection nevertheless to depict the world in some fashion. It was very difficult, for it is the cross-linking of different senses which counts. Even in astronomy, if something is "seen" by radio and by optics, one begins to know what it is. If you do not "see" it in more than one way, you are not very clear what it in fact is.

Third, people have to be active. I do not think a merely passive machine, which simply reads the program it is given, or hears the input, or receives a memory file, can possibly be enough to simulate the human mind. It must try experiments like those we constantly try in childhood unthinkingly, but instructed by built-in mechanisms. It must try to arrange the world in different fashions.

Fourth, I do not think it can be individual. It must be social in nature. It must accumulate the work--the languages, if you will--of other machines with wide experience. While human beings might be regarded collectively as general-purpose devices, individually they do not impress me much that way at all. Every day I meet people who know things I could not possibly know and can do things I could not possibly do, not because we are from differing species, not because we have different machine natures, but because we have been programmed differently by a variety of experiences as well as by individual genetic legacies. I strongly suspect that this phenomenon will reappear in machines that specialize, and then share experiences with one another. A mathematical theorem of Turing tells us that there is an equivalence in that one machine's talents can be transformed mathematically to another's. This gives us a kind of guarantee of unity in the world, but there is a wide difference between that unity, and a choice among possible domains of activity. I suspect that machines will have that choice, too. The absence of a general-purpose mind in humans reflects the importance of history and of development. Machines, if they are to simulate this behavior--or as I prefer to say, share it--must grow inwardly diversified, and outwardly sociable.

Fifth, it must have a history as a species, an evolution. It cannot be born like Athena, from the head full-blown. It will have an archaeological and probably a sequential development from its ancestors. This appears possible. Here is one of computer science's slogans, influenced by the early rise of molecular microbiology: A tape, a machine whose instructions are encoded on the tape, and a copying machine. The three describe together a self-reproducing structure. This is a liberating slogan; it was meant to solve a problem in logic, and I think it did, for all but the professional logicians. The problem is one of the infinite regress which looms when a machine becomes competent enough to reproduce itself. Must it then be more complicated than itself? Nonsense soon follows. A very long instruction tape and a complex but finite machine that works on those instructions is the solution to the logical problem."

Consciousness and the Physical World, edited by V. S. Ramachandran and Brian Josephson

Consciousness and the Physical World: Proceedings of the Conference on Consciousness Held at the University of Cambridge, 9th-10th January, 1978

This is an utterly fascinating book, one that often got me so excited that I could hardly sleep or walk without having loud, vocal arguments with myself. It takes a novel view of consciousness that places minds (and not just brains) at the center of evolution and the universe. It is based on a symposium on consciousness held at Cambridge University in January 1978 and is edited by Brian Josephson and V. S. Ramachandran, both incredibly creative scientists. Most essays in the volume are immensely thought-provoking, but I will highlight a few here.

Rutherford on tools and theories (and machine learning)

Ernest Rutherford was the consummate master of experiment, disdaining theoreticians for playing around with their symbols while he and his fellow experimentalists discovered the secrets of the universe. He was said to have used theory and mathematics only twice - once when he discovered the law of radioactive decay and again when he used the theory of scattering to interpret his seminal discovery of the atomic nucleus. But that's where his tinkering with formulae stopped.

Time and time again Rutherford used relatively simple equipment and tools to pull off seemingly miraculous feats. He had already won the Nobel Prize in Chemistry by the time he discovered the nucleus - a rare and curious case of a scientist making their most important discovery after winning a Nobel Prize. The nucleus clearly deserved another Nobel, but so did his fulfillment of the alchemists' dreams when he transmuted nitrogen into oxygen by artificial disintegration of the nitrogen atom in 1919. These achievements fully justified Rutherford's stature as perhaps one of the two greatest experimental physicists in modern history, the other being Michael Faraday. But they also justified the primacy of tools in engineering scientific revolutions.

However, Rutherford was shrewd and wise enough to recognize the importance of theory - he famously mentored Niels Bohr, presumably because "Bohr was different; he was a football player." And he was on good terms with both Einstein and Eddington, the doyens of relativity theory in Europe. So it's perhaps not surprising that he made a quite interesting observation about the discovery of radioactivity, one that attests to the importance of theoretical ideas.

As everyone knows, radioactivity in uranium was discovered by Henri Becquerel in 1896, then taken to great heights by the Curies. But as Rutherford points out in a revealing paragraph (Brown, Pais and Pippard, "Twentieth Century Physics", Vol. 1; 1995), it could potentially have been discovered a hundred years earlier. More accurately, it could have been experimentally discovered a hundred years earlier.

Rutherford's basic point is that unless there's an existing theoretical framework for interpreting an experiment - providing the connective tissue, in some sense - the experiment remains merely an observation. Depending only on experiments to automatically uncover correlations and new facts about the world is therefore tantamount to hanging on to a tenuous thread that might lead you in the right direction only occasionally, by pure chance. In some ways Rutherford here is echoing Karl Popper's refrain that even supposedly unbiased observations are "theory laden"; in the absence of the right theory, there's nothing to ground them.

It strikes me that Rutherford's caveat applies well to machine learning. One goal of machine learning - at least as believed by its most enthusiastic proponents - is to find patterns in the data, whether the data is dips and rises in the stock market or signals from biochemical networks, by blindly letting the algorithms discover correlations. But simply letting the algorithm loose on data would be like letting gold leaf electroscopes and other experimental apparatus loose on uranium. Even if they find some correlations, these won't mean much in the absence of a good intellectual framework connecting them to basic facts. You could find a correlation between two biological responses, for instance, but in the absence of a holistic understanding of how the components responsible for these responses fit within the larger framework of the cell and the organism, the correlations would stay just that - correlations without a deeper understanding.

What's needed to get to that understanding is machine learning plus theory, whether it's a theory of the mind for neuroscience or a theory of physics for modeling the physical world. It's why efforts that supplement machine learning by embedding knowledge of the laws of physics or biology in the algorithms are likely to work. Efforts that blindly use machine learning to discover truths about natural and artificial systems from correlations alone, by contrast, would be like Rutherford's fictitious uranium salts from 1806: giving off mysterious radiation that's detected without interpretation, posing a question waiting for an explanation.
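To make the worry about blindly discovered correlations a bit more concrete, here is a minimal sketch in Python. It is my own toy illustration, not anything Rutherford or a particular machine learning library prescribes: the function names, the number of series, the series length and the seed are all assumptions chosen for the example. It generates many short series of pure, independent noise and then searches every pair for the strongest correlation, which is roughly what an algorithm let loose on data without a theory would do.

```python
import random
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def strongest_chance_correlation(n_series=200, n_points=10, seed=0):
    """Hypothetical helper: build independent Gaussian noise series and
    return the pair with the largest absolute correlation, together with
    that correlation, using a brute-force search over all pairs."""
    rng = random.Random(seed)
    series = [[rng.gauss(0.0, 1.0) for _ in range(n_points)]
              for _ in range(n_series)]
    best = max(
        combinations(range(n_series), 2),
        key=lambda pair: abs(pearson(series[pair[0]], series[pair[1]])),
    )
    return best, pearson(series[best[0]], series[best[1]])


if __name__ == "__main__":
    pair, r = strongest_chance_correlation()
    print(f"series {pair[0]} and series {pair[1]}: r = {r:.2f}")
```

Because there are nearly twenty thousand pairs to choose from and only ten points per series, the winning pair will typically look strongly correlated even though, by construction, nothing connects the two series. Without a framework that says which relationships ought to exist, the search has no way of telling this kind of accident from a real effect.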
